Publications

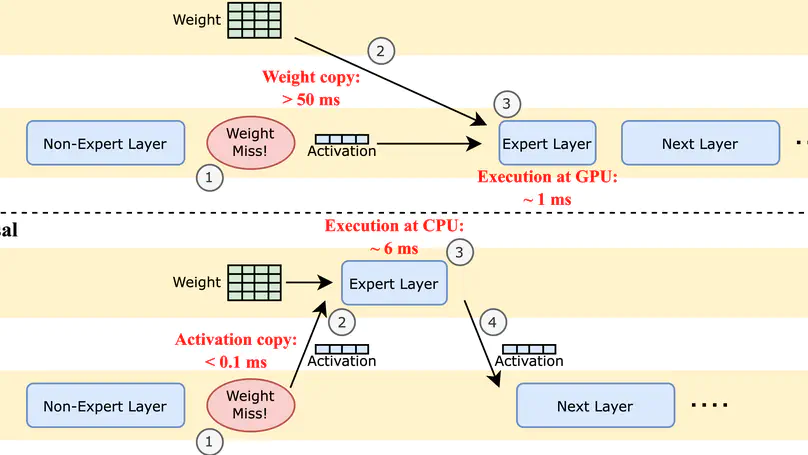

- Identified that existing serving systems underutilize the resources of a single device because they execute operations with different resource requirements sequentially

- Overlapped the use of different resources within a single device by co-scheduling operations, raising overall resource utilization
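A toy model of why co-scheduling helps, offered as a minimal sketch rather than the method from the paper. It assumes each operation is bound by one dominant resource (the labels `"compute"` and `"memory"` and the durations are hypothetical). It also assumes that operations bound by different resources overlap perfectly with no interference. Under these assumptions, sequential execution takes the sum of all durations, while co-scheduling is limited only by the busiest resource.

```python
from dataclasses import dataclass


@dataclass
class Op:
    name: str        # hypothetical operation name
    resource: str    # dominant resource (hypothetical labels: "compute" or "memory")
    duration: float  # time the op takes when run alone on the device


def busy_time(ops, resource):
    # Total time the given resource is busy across all ops that depend on it.
    return sum(op.duration for op in ops if op.resource == resource)


def sequential_makespan(ops):
    # One op at a time: each op holds the whole device for its full duration.
    return sum(op.duration for op in ops)


def coscheduled_makespan(ops):
    # Idealized assumption: ops bound by different resources run concurrently
    # with perfect overlap, so the busiest resource sets the total time.
    resources = {op.resource for op in ops}
    return max(busy_time(ops, r) for r in resources)


def utilization(ops, makespan):
    # Fraction of the makespan during which each resource is busy.
    return {r: busy_time(ops, r) / makespan for r in sorted({op.resource for op in ops})}


ops = [
    Op("compute_op_1", "compute", 4.0),
    Op("memory_op_1", "memory", 3.0),
    Op("compute_op_2", "compute", 2.0),
    Op("memory_op_2", "memory", 3.0),
]

seq = sequential_makespan(ops)   # 12.0
co = coscheduled_makespan(ops)   # 6.0
print("sequential:", seq, utilization(ops, seq))
print("co-scheduled:", co, utilization(ops, co))
```

With these made-up numbers, each resource sits idle half the time under sequential execution, and both resources are fully busy under ideal co-scheduling. Real co-scheduled operations contend for shared hardware, so the co-scheduled makespan here is a lower bound, not a prediction.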